Maximizing API Performance: Strategies for Speed, Scalability, and Reliability

In today's digital landscape, APIs (Application Programming Interfaces) play a crucial role in facilitating communication between different software systems, enabling seamless data exchange and integration. However, as APIs become increasingly central to modern software development, ensuring optimal performance is paramount. In this comprehensive guide, we'll explore a myriad of strategies and best practices for maximizing API performance, covering everything from infrastructure optimization to code-level improvements.

Understanding API Performance:

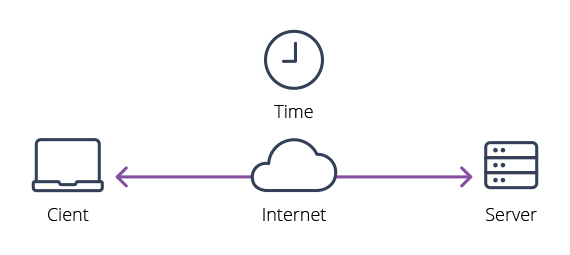

API performance refers to the efficiency and responsiveness of an API in handling incoming requests, processing data, and delivering timely responses to clients. Key metrics used to measure API performance include response time, throughput (requests per second), latency, error rates, and scalability.

1. Infrastructure Optimization:

Infrastructure optimization is a critical aspect of maximizing API performance, focusing on streamlining the underlying hardware and software environment to enhance efficiency and scalability. This involves implementing caching mechanisms to store frequently accessed data, load balancing to evenly distribute incoming requests across multiple server instances, and horizontal scaling to dynamically adjust capacity based on demand. By optimizing infrastructure components such as servers, databases, and networking resources, organizations can ensure optimal resource utilization, minimize latency, and enhance fault tolerance, ultimately delivering faster and more reliable API services to users.

Caching: Caching stores frequently accessed data in memory or in a distributed cache, reducing the need to fetch it from the backend on every API request. Responses can be cached at several layers of the application stack, such as the API gateway, middleware, or database layer, and each layer can significantly improve performance and scalability. At the API gateway, caching responses to commonly requested endpoints reduces the load on backend services and improves overall response times. Middleware caching, whether in-process or in a store such as Redis, holds intermediate results or computed data, avoiding redundant computation and speeding up response generation. At the database layer, caching query results or frequently accessed data objects minimizes the need for expensive queries. However, it's essential to strike a balance: caching too aggressively leads to stale data or memory bloat, while caching too little forfeits the performance benefits. Cache invalidation and expiration policies should be implemented to keep data fresh and the cache efficient.
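As a rough illustration of expiration and invalidation, here is a minimal in-memory cache sketch. The class name `TTLCache`, its methods, and the injectable `clock` parameter are assumptions made for this example, not part of any particular library; a production system would more likely use a shared store such as Redis.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be exercised without sleeping
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: the backend is not touched
        value = loader()  # miss or expired entry: fetch from the backend
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Explicit invalidation, for example after the underlying record is updated."""
        self._store.pop(key, None)
```

A short TTL limits staleness at the cost of more backend calls; calling `invalidate` on writes keeps hot keys fresh without shortening the TTL for everything.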

Load Balancing: Use load balancers to distribute incoming API requests across multiple server instances or containers, ensuring an even distribution of workload and preventing any single server from becoming a bottleneck. Load balancing is pivotal in distributed systems, where multiple instances of an API service handle incoming requests. By spreading traffic across these instances, load balancers improve resource utilization, throughput, fault tolerance, and scalability. Dedicated load balancers typically run as reverse proxies in front of the API servers and route incoming requests according to predefined algorithms such as round-robin or least connections. They also run health checks against backend servers and automatically route traffic away from unhealthy ones to maintain service availability. With advanced features such as SSL termination and content-based routing, load balancers offer fine-grained traffic management, and they can be deployed redundantly to eliminate single points of failure, so that service continues during hardware or software failures.
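The two routing algorithms mentioned above can be sketched in a few lines. This is a simplified model, not how any specific load balancer is implemented; the `LoadBalancer` class, its method names, and the server labels are hypothetical.

```python
from typing import Dict, List


class LoadBalancer:
    """Toy model of round-robin and least-connections selection with health status."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)
        self.healthy = set(servers)
        self.active: Dict[str, int] = {s: 0 for s in servers}  # open connections per server
        self._next = 0

    def mark(self, server: str, is_healthy: bool) -> None:
        """Record the outcome of a health check."""
        if is_healthy:
            self.healthy.add(server)
        else:
            self.healthy.discard(server)

    def round_robin(self) -> str:
        # Walk the ring at most once, skipping servers that failed their health check.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")

    def least_connections(self) -> str:
        candidates = [s for s in self.servers if s in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy servers available")
        return min(candidates, key=lambda s: self.active[s])
```

Round-robin suits requests of similar cost; least connections tends to behave better when request durations vary widely.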

Horizontal Scaling: Scale API infrastructure horizontally by adding more server instances or containers to handle increased traffic, using auto-scaling mechanisms to adjust capacity dynamically based on demand. Horizontal scaling, also known as scaling out, adds machines or instances to the existing pool of servers so that incoming requests are spread across them, reducing the burden on each server and improving overall responsiveness. This approach is particularly beneficial for APIs experiencing rapid growth or sudden spikes in demand. It also improves fault tolerance and resilience without the limitations of vertical scaling, where increasing the resources of a single server eventually hits practical constraints. Because stateless API servers can process requests in parallel, capacity can grow close to linearly as servers are added, provided shared dependencies such as the database do not become the bottleneck. Modern container orchestration platforms like Kubernetes facilitate the deployment and management of horizontally scaled API services, offering features such as automatic scaling based on resource usage metrics and workload distribution across nodes. Through horizontal scaling, organizations can maintain consistent API performance under varying loads while keeping resource allocation flexible and cost-effective.
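To make metric-based auto-scaling concrete: the Kubernetes Horizontal Pod Autoscaler documents its core calculation as desired replicas = ceil(current replicas × current metric / target metric). The sketch below reproduces only that formula with a min/max clamp. The function name and default bounds are assumptions for illustration, and the real autoscaler also applies tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike cannot scale the service without bound.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas averaging 90% CPU against a 60% target yields 6 replicas, while the same 4 replicas at 30% CPU would shrink to 2.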

Content Delivery Networks (CDNs): Use CDNs to cache and serve static assets and cacheable API responses from edge locations closer to end users, reducing latency and improving response times for geographically distributed clients. A CDN is a network of edge servers distributed across many geographic locations. When an API request is made, the CDN routes it to a nearby edge server, minimizing the distance data needs to travel. This proximity-based routing gives users faster response times regardless of their location. When frequently accessed API responses and static assets are cached at the edge, the CDN can serve them directly, eliminating repeated requests to origin servers. This reduces origin load and accelerates delivery, particularly for content that does not change often. CDNs also apply techniques such as content compression, which reduces payload sizes, and TCP optimization, which tunes the transport connection, resulting in faster data transmission. By leveraging CDNs, organizations can mitigate latency issues, improve scalability, and deliver a better experience for users of their API-driven applications.
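Whether a CDN may cache a response is usually controlled by the `Cache-Control` header the origin sends. The sketch below shows one way an origin might choose that header; the path prefix, the durations, and the function name are hypothetical, while the directives themselves (`public`, `private`, `no-store`, `max-age`, `s-maxage`, `immutable`) are standard HTTP caching directives.

```python
def cache_control_for(path: str, is_personalized: bool) -> str:
    """Pick a Cache-Control value for a response (illustrative policy only)."""
    if is_personalized:
        # Per-user data must never be stored by a shared cache such as a CDN.
        return "private, no-store"
    if path.startswith("/static/"):
        # Assumes static assets are fingerprinted, so they can be cached for a year.
        return "public, max-age=31536000, immutable"
    # Cacheable API data: brief browser caching, slightly longer at the edge.
    return "public, max-age=60, s-maxage=300"
```

Here `s-maxage` applies only to shared caches, which lets the edge hold a response longer than individual clients do.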

2. Database Optimization:

Database optimization involves fine-tuning database configurations, queries, and indexing strategies to improve the efficiency and performance of data retrieval and processing operations. This includes indexing frequently accessed fields, minimizing the use of expensive operations, and avoiding unnecessary joins to optimize query performance. Additionally, database profiling tools are used to identify slow queries, analyze query execution plans, and optimize database schema and indexing strategies for better performance. By optimizing database operations, organizations can reduce query execution times, minimize resource consumption, and enhance the overall responsiveness of their API-driven applications, ultimately delivering a superior user experience.

Query Optimization: Optimize database queries by indexing frequently accessed fields, minimizing the use of expensive operations, and avoiding unnecessary joins. Well-optimized queries are instrumental in improving API performance because they keep data retrieval and processing efficient. By crafting queries that leverage database indexes, fetch only the data they need, and use efficient joins and aggregations, developers can significantly reduce query execution time and resource consumption. Appropriate indexing strategies, such as indexing columns frequently used in WHERE clauses or JOIN conditions, allow the database to locate matching rows without scanning the whole table. Techniques like query caching and result set pagination help minimize database load and network overhead, especially with large datasets or high query volumes. Developers can also avoid expensive operations such as nested subqueries or full table scans, and design the schema to eliminate redundancy through appropriate normalization. By adopting these practices and reviewing query execution plans, developers can ensure that API endpoints retrieve and process data quickly, delivering better performance and responsiveness to end users.
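The effect of an index and of pagination can be observed with Python's built-in `sqlite3` module. This is a small sketch under assumed names: the `orders` table, its columns, the index name, and the helper functions are all invented for the example, and other databases expose query plans through different commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)


def query_plan(sql: str, params: tuple = ()) -> str:
    """Return SQLite's plan description for a statement (last column of each row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


lookup = "SELECT id, total FROM orders WHERE customer_id = ?"
plan_before = query_plan(lookup, (42,))  # no index yet: the table is scanned
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(lookup, (42,))  # the plan now references the index


def page_after(last_id: int, page_size: int) -> list:
    """Keyset pagination: resume after the last seen id instead of using a large OFFSET."""
    return conn.execute(
        "SELECT id, customer_id, total FROM orders WHERE id > ? ORDER BY id LIMIT ?",
        (last_id, page_size),
    ).fetchall()
```

Comparing `plan_before` with `plan_after` shows the planner switching from a table scan to an index search, and `page_after` keeps each page cheap because it seeks directly to the starting row.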

Database Profiling: Use database profiling tools to identify and address slow queries, analyze query execution plans, and optimize database schema and indexing strategies. Database profiling analyzes the performance of database operations to identify bottlenecks, inefficiencies, and opportunities for optimization. By tracking metrics such as query execution time, resource utilization, and lock contention, developers gain insight into the factors affecting database performance. Profiling tools help pinpoint slow queries, ineffective indexes, and resource-intensive operations, so that database configuration, query execution plans, and application code can be tuned accordingly. Profiling also supports proactive monitoring and tuning over time, so that applications keep performing well as data volumes and user loads grow. Through systematic profiling and analysis, developers can improve database performance and application responsiveness.
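Most databases ship their own slow-query logs and profilers, which are the better choice in practice. Purely to illustrate the idea of tracking query execution time, here is an application-side sketch using `sqlite3`; the `ProfiledConnection` wrapper and its threshold parameter are hypothetical.

```python
import sqlite3
import time
from typing import List, Tuple


class ProfiledConnection:
    """Wraps a sqlite3 connection and records queries slower than a threshold."""

    def __init__(self, conn: sqlite3.Connection, slow_threshold_s: float):
        self.conn = conn
        self.slow_threshold_s = slow_threshold_s
        self.slow_queries: List[Tuple[str, float]] = []  # (sql, elapsed seconds)

    def execute(self, sql: str, params: tuple = ()) -> list:
        start = time.perf_counter()
        rows = self.conn.execute(sql, params).fetchall()  # include fetch time in the measurement
        elapsed = time.perf_counter() - start
        if elapsed >= self.slow_threshold_s:
            self.slow_queries.append((sql, elapsed))
        return rows
```

Queries that show up repeatedly in `slow_queries` are natural candidates for execution-plan analysis and indexing.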

3. Code-Level Optimization:

Code-level optimization, a crucial aspect of maximizing software performance, involves refining and enhancing the efficiency of application code to deliver faster response times and better scalability. This process encompasses identifying and rectifying inefficiencies in algorithms, loops, and data structures, as well as utilizing performance profiling tools to pinpoint critical code paths for optimization. By streamlining and fine-tuning the codebase, developers can significantly improve the performance of their applications, ensuring smoother execution and enhanced user experiences.

Asynchronous Processing: Offloading long-running or resource-intensive tasks to background workers or queues is a powerful technique for improving API performance. With asynchronous processing, tasks that don't require an immediate response, such as sending emails or processing large datasets, can be handled without blocking the request that triggered them. The API acknowledges the request and continues serving others while the task runs in the background, which improves overall throughput, scalability, and responsiveness.
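The pattern can be sketched with the standard library's `queue` and `threading` modules. This is a single-process illustration only; the handler name `handle_signup`, the response shape, and the simulated email step are assumptions, and a real deployment would typically use a durable queue and separate worker processes.

```python
import queue
import threading

jobs: "queue.Queue[str]" = queue.Queue()
sent = []  # stands in for the side effect of actually sending an email


def worker() -> None:
    """Background worker: pulls jobs off the queue and processes them one by one."""
    while True:
        email = jobs.get()
        try:
            sent.append(f"welcome email sent to {email}")  # the slow work happens here
        finally:
            jobs.task_done()


def handle_signup(email: str) -> dict:
    """Request handler: enqueue the slow task and respond immediately."""
    jobs.put(email)
    return {"status": 202, "message": "accepted"}


threading.Thread(target=worker, daemon=True).start()
```

The handler returns a 202-style acknowledgement as soon as the job is queued, so response time no longer depends on how long the email takes to send.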

API Response Optimization: Optimizing the size and structure of API responses is crucial for improving performance and reducing transfer time. One approach is to return only the data fields the client application actually needs. The serialization format matters as well: JSON is widely supported and can be emitted compactly, while binary formats such as Protocol Buffers typically produce smaller payloads, and compressing responses reduces transmission overhead further. Avoiding unnecessary metadata and oversized payloads streamlines communication between client and server, resulting in faster response times and better overall performance.
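A minimal sketch of both ideas, field selection and compression, using only the standard library. The helper names and the sample record are invented for illustration; in practice the web framework or a reverse proxy often handles compression.

```python
import gzip
import json
from typing import Dict, Iterable, Tuple


def select_fields(record: dict, fields: Iterable[str]) -> dict:
    """Keep only the fields the client asked for."""
    return {name: record[name] for name in fields if name in record}


def encode_response(payload, accept_gzip: bool) -> Tuple[bytes, Dict[str, str]]:
    """Serialize compactly and gzip the body when the client supports it."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    if accept_gzip:
        return gzip.compress(body), {"Content-Encoding": "gzip"}
    return body, {}
```

Selecting fields removes data before serialization, while compression shrinks whatever remains, so the two techniques complement each other.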

Code Optimization: Reviewing and optimizing API code is essential for efficient operation and scalability. By identifying and refactoring inefficient algorithms, loops, or data structures, developers can significantly improve the performance of their APIs. Performance profiling tools reveal hotspots and critical code paths, so that optimization effort goes where it has the most effect. By continuously refining the codebase, developers can deliver faster response times and a better overall user experience.
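A common example of a data-structure fix is replacing repeated list membership tests with a set. The function names below are made up for the illustration; both versions return the same result, but the second avoids rescanning the list for every lookup.

```python
from typing import List


def find_known_slow(ids: List[int], known: List[int]) -> List[int]:
    # `i in known` scans the list each time: roughly len(ids) * len(known) comparisons.
    return [i for i in ids if i in known]


def find_known_fast(ids: List[int], known: List[int]) -> List[int]:
    known_set = set(known)  # one pass to build, then average constant-time lookups
    return [i for i in ids if i in known_set]
```

The difference is negligible for a handful of items and substantial once both collections reach thousands of entries, which is why profiling first is worthwhile.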

4. Network Optimization:

Network optimization is essential for enhancing the performance and reliability of digital systems, particularly in the realm of web services and APIs. This multifaceted approach involves fine-tuning various networking components to reduce latency, increase throughput, and streamline data transmission. One pivotal aspect of network optimization is leveraging modern protocols like HTTP/2, which introduces advanced features such as multiplexing and header compression to facilitate faster and more efficient communication between clients and servers. Additionally, optimizing TCP connection settings, such as adjusting window sizes and congestion control algorithms, can significantly enhance data transmission efficiency over network connections. Furthermore, minimizing DNS lookup times through techniques like caching and leveraging fast DNS servers is crucial for reducing latency and improving overall network responsiveness. By implementing these optimization strategies, organizations can ensure smoother and more responsive network operations, ultimately delivering superior user experiences and performance for web applications and APIs.

HTTP/2: Enabling HTTP/2 is a significant step towards improving network performance. Through multiplexing, HTTP/2 allows multiple requests and responses to be in flight concurrently over a single TCP connection, and header compression reduces the overhead of repeated headers. Together these reduce the latency associated with establishing multiple connections, resulting in faster loading times for web pages and API responses. HTTP/2 also defines server push, which lets servers send resources to clients before they are requested. However, some major browsers have since removed support for it, so client support should be verified before relying on it.

TCP Optimization: Optimizing TCP connection settings is essential for maximizing network throughput and minimizing latency. Fine-tuning parameters such as TCP window size, congestion control algorithm, and connection timeout values can significantly improve the efficiency of data transmission. By adjusting these settings to match network conditions and application requirements, developers can reduce connection overhead, limit the impact of packet loss, and improve overall network performance. TCP Fast Open can save a round trip when reconnecting to a known server, which helps short-lived connections in particular, while fast retransmit, a loss-recovery mechanism built into modern TCP stacks, resends lost segments without waiting for a timeout, which matters most on high-latency networks.
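A useful starting point for sizing TCP windows and buffers is the bandwidth-delay product: the amount of data that must be in flight to keep a link full, equal to bandwidth multiplied by round-trip time. The sketch below computes it and shows how buffer sizes might be requested on a socket. The function names are illustrative, and operating systems may cap or adjust the requested sizes.

```python
import socket


def bandwidth_delay_product_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> int:
    """Bytes in flight needed to saturate the link: bandwidth * RTT, converted to bytes."""
    return bandwidth_bits_per_s * rtt_ms // (8 * 1000)


def configure_socket(sock: socket.socket, buffer_bytes: int) -> None:
    """Request send/receive buffers of the given size (the OS may clamp the value)."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, buffer_bytes)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, buffer_bytes)
    # Disable Nagle's algorithm so small request/response messages are not delayed.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
```

For a 100 Mbit/s path with an 80 ms round trip, the product is 1,000,000 bytes, so a window much smaller than about 1 MB would leave that link underused.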

DNS Optimization: Minimizing DNS lookup times is crucial for reducing latency and improving network responsiveness. Fast and reliable DNS servers resolve domain names to IP addresses quickly, reducing the time required to establish connections to remote servers. Techniques such as local DNS caching, or using the DNS services provided by content delivery networks (CDNs), can further reduce resolution times. The TTL (time-to-live) values of DNS records involve a trade-off: longer TTLs let resolvers and clients cache answers for longer and so reduce the number of lookups, while shorter TTLs keep caches up to date and ensure that clients pick up changed IP addresses quickly, at the cost of more frequent lookups.

5. Monitoring and Profiling:

Monitoring and profiling are indispensable practices for ensuring the optimal performance of APIs in today's dynamic digital landscape. Monitoring tools play a crucial role in providing real-time insights into key performance metrics such as response times, error rates, and resource utilization, enabling developers to swiftly detect anomalies and preemptively address potential issues. By continuously tracking these metrics, teams can effectively manage resources, plan capacity, and scale infrastructure to meet evolving demands efficiently. On the other hand, performance profiling offers a deeper understanding of API execution behavior by analyzing factors like request processing times and memory consumption. This allows developers to identify performance bottlenecks, optimize code, and fine-tune resource utilization for enhanced scalability and responsiveness. Together, monitoring and profiling empower teams to make informed decisions, prioritize optimization efforts, and ensure the seamless operation of API-driven applications.

Monitoring Tools: Employing monitoring tools is essential for gaining insight into the performance of an API. These tools track key performance indicators such as response times, error rates, throughput, and resource utilization in real time. By continuously monitoring these metrics, developers can quickly identify deviations from expected behavior and address potential issues before they escalate. Monitoring data also feeds capacity planning and optimization, allowing teams to make informed decisions about resource allocation and infrastructure scaling.
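The indicators listed above can be derived from raw request records. The following sketch assumes each record is a dictionary with `latency_ms` and `status` keys, which is an invented shape for this example; it uses the nearest-rank method for percentiles and counts 5xx responses as errors.

```python
import math
from typing import Dict, List


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(requests: List[dict], window_seconds: float) -> Dict[str, float]:
    """Summarize one monitoring window of request records."""
    latencies = [r["latency_ms"] for r in requests]
    errors = sum(1 for r in requests if r["status"] >= 500)
    return {
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
    }
```

Tracking a high percentile alongside the median is useful because averages can hide the slow requests that a minority of users experience.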

Performance Profiling: Performance profiling is a critical part of optimizing API performance. Profiling gives developers deep insight into the execution behavior of their code, exposing performance bottlenecks and areas for improvement. Profiling tools analyze factors such as request processing times, memory consumption, CPU usage, and database query performance. Armed with this information, developers can refactor code, optimize algorithms, and fine-tune resource utilization to improve scalability, reliability, and responsiveness. Profiling also helps teams prioritize optimization efforts by expected impact, so that limited resources go to the most critical issues first.
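As one concrete way to find hotspots, Python's built-in `cProfile` and `pstats` modules can profile a single call and report the most expensive functions. The `handle_request` and `slow_sum_of_squares` functions below are placeholders standing in for real request-handling code.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n: int) -> int:
    total = 0
    for i in range(n):
        total += i * i
    return total


def handle_request() -> int:
    """Placeholder for an API handler whose cost we want to understand."""
    return slow_sum_of_squares(200_000)


def profile(func):
    """Run func under cProfile and return (result, text report of the top entries)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func)
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(5)
    return result, out.getvalue()
```

Calling `profile(handle_request)` returns the handler's result together with a report sorted by cumulative time, which makes the functions dominating the request easy to spot.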

In conclusion, maximizing API performance is a multifaceted endeavor that requires a holistic approach encompassing infrastructure optimization, database tuning, code-level improvements, network optimization, and diligent monitoring and profiling. By implementing the strategies outlined in this guide and continuously monitoring performance metrics, organizations can ensure that their APIs deliver optimal speed, scalability, and reliability, enabling seamless integration and superior user experiences in today's digital ecosystem.
